# <-----------Network----------> #Time netKBi pkt-in netKBo pkt-out 10:02:07 76465 51531 1757 25695 10:02:08 117243 79194 2698 39454 10:02:09 117272 79117 2698 39463 10:02:10 116091 78362 2662 38955 10:02:11 117296 79112 2698 39466 10:02:12 233610 157622 5372 78566 10:02:13 116137 78351 2668 39040

Before I answer that, look at the following samples taken once every 0.2 seconds. Now we're not only seeing rates of 500MB/sec, we're also seeing 4 intervals of no transmission.

# <-----------Network----------> #Time netKBi pkt-in netKBo pkt-out 10:04:02.009 0 0 0 0 10:04:02.209 587357 396329 13482 197219 10:04:02.409 0 5 0 0 10:04:02.609 0 0 0 0 10:04:02.809 0 0 0 0 10:04:03.009 0 0 0 0 10:04:03.209 587102 396524 13505 197539 10:04:03.409 0 0 0 0 10:04:03.609 0 0 0 0 10:04:03.809 0 0 0 0 10:04:04.009 0 0 0 0

This is also a good time to mention the -on switch, which stands for options: don't normalize. It tells collectl not to normalize to KB/sec but rather just report the total counter value for each interval. Now for a 0.2 second interval you will see 100MB every 5th interval and also note if you use -on for a 5 second interval you'll again see 500MB. Be sure to try these experiments yourself!

collectl -sn -oTn -i5 waiting for 5 second sample... # <-----------Network----------> #Time netKBi pkt-in netKBo pkt-out 10:05:32 586720 395908 13501 197477 10:05:37 587072 396107 13507 197586 10:05:42 582604 393122 13400 196009 10:05:47 587208 396175 13513 197644

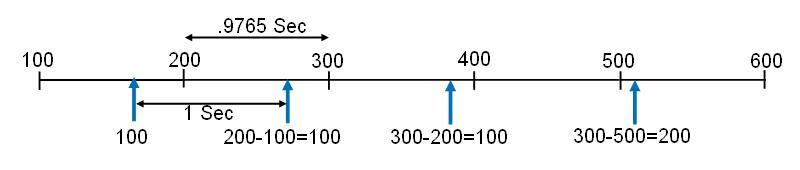

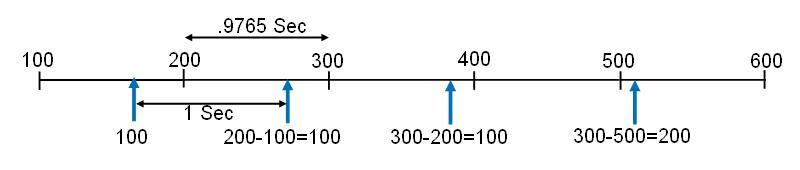

OK, so what's the second point? This one is more subtle. It actually turns out that some drivers are not requesting statistics as close to 1 second as they should and in fact are only requesting them about every 0.9765 seconds. Furthermore, since one interval incorrectly reported double the rate it should have, where did the extra 100MB come from? This actually results in under reporting the rates for all the other intervals. If you now look at the following samples you'll see higher traffic rates which are also more accurate.

collectl -sn -oT -i.975 waiting for .975 second sample... # <-----------Network----------> #Time netKBi pkt-in netKBo pkt-out 10:08:13 119821 80908 2754 40289 10:08:14 119711 80757 2746 40163 10:08:15 119823 80881 2754 40275 10:08:16 119802 80805 2750 40230

On top of the time line we see the network counters being updated from 100 to 200 and so on. On the bottom on the line we see what an observer sees who looks at the network counter once a second. As you can see, during the first 3 intervals the counter is seen to increment by 100 and so the rate gets reported as 100/sec. However during the 4th interval, the counter is seen to increment by 200 and so the wrong value is reported.

However what if you want to run at monitoring frequencies of less than a second, say every 1/2 second or even less? Some drivers actually let you change their update frequency with ethtool. The command ethtool -C eth0 stats-block-usecs 500000 will in fact set that value to 1/2 second.